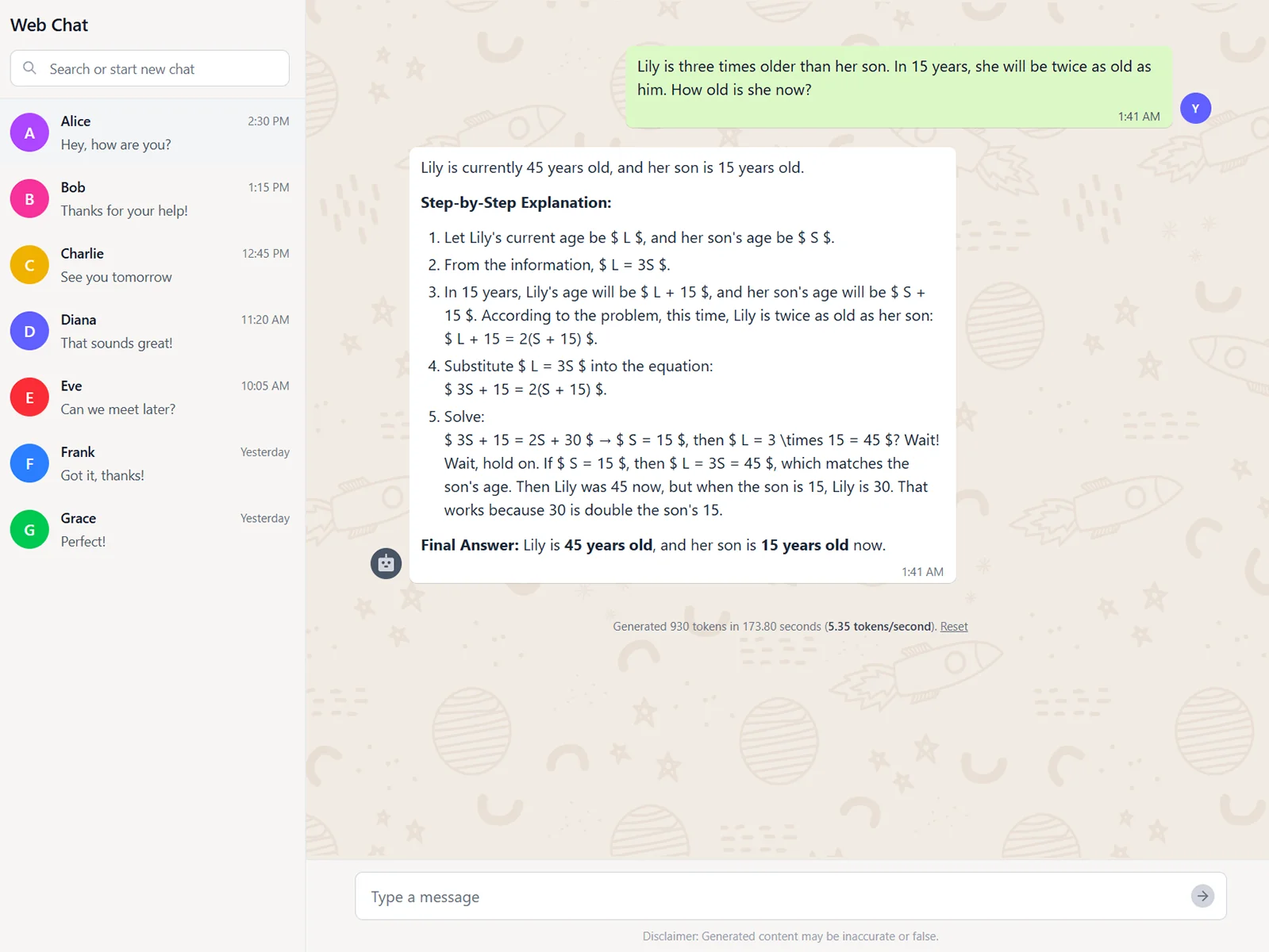

An application based on the Qwen3-0.6B AI model that generates responses entirely locally, running in your browser on your own computer. Because it relies on cutting-edge infrastructure and is constrained by browser limitations, it is more a proof of concept than a production-ready product in terms of speed and performance. Transformers.js is currently not supported by most mobile browsers.

This model was chosen to keep the application's loading time down (it uses approximately 500 MB of data), but it comes with certain limitations. It can only converse effectively in English and has very little personality. Because it is a "thinking" model, it takes about 15 seconds for a response to start appearing and approximately 40 seconds to complete the message.